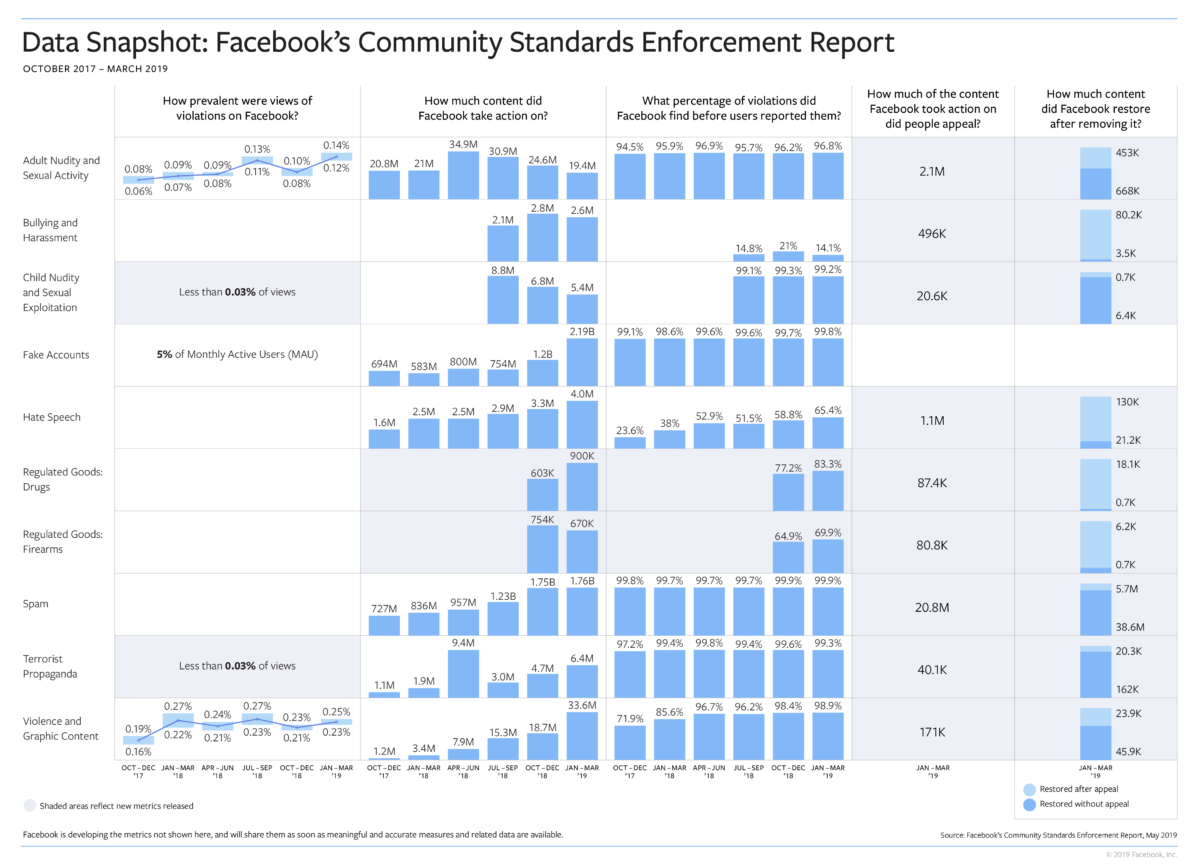

Facebook has released a new Community Standards Enforcement Report, which covers Q4 2018 and Q1 2019 and shows how successful Facebook has been at detecting content that violates its community rules.

The report contains data and metrics showing which categories are the most problematic in terms of violations of Facebook's standards and how many enforcement decisions are appealed and reversed. It also includes data on the regulated goods policy.

Facebook has updated its list of violation categories, which now covers nine areas:

- Adult nudity and sexual activity

- Bullying and harassment

- Child nudity and sexual exploitation of children

- Fake accounts

- Hate speech

- Regulated goods: drugs and firearms

- Spam

- Terrorist propaganda

- Violence and graphic content

The following graph shows several metrics that measure how successful Facebook is at detecting content that violates its community policies:

- How many violations were detected in each category per period.

- The proactive rate: the percentage of violating content Facebook found before users reported it.

- How much removed content users appealed.

- How much content Facebook restored after removing it.

In some categories, Facebook's detection rate is very high, around 90 to 99.9%, for nudity, sexual activity, pornography, spam, and terrorism. For bullying and harassment, however, Facebook identified only 14% of the 2.6 million cases before users reported them. For hate speech, it identified 65.4% of the 4 million cases, and for regulated goods, 69.9% of the 670,000 cases.

Fake accounts are another widespread violation, and here Facebook is highly successful, detecting 99.8% of the 2.2 billion cases proactively. Compared with the previous quarter, the number of fake accounts removed increased by about 1 billion.

Facebook also deals with spam, removing more than 1.76 billion pieces of content.
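To make these percentages concrete, the minimal Python sketch below converts a proactive rate and a case count into approximate absolute numbers. It uses only the rounded figures quoted in this article, so the results are estimates, and the function names are illustrative, not part of any Facebook tool or API.

```python
# Rounded figures quoted in this article: (cases actioned, proactive rate in %).
reported_figures = {
    "Bullying and harassment": (2_600_000, 14.0),
    "Hate speech": (4_000_000, 65.4),
    "Regulated goods": (670_000, 69.9),
    "Fake accounts": (2_200_000_000, 99.8),
}


def proactive_count(actioned: int, proactive_rate_pct: float) -> int:
    """Approximate number of cases Facebook found before any user report."""
    return round(actioned * proactive_rate_pct / 100)


def user_reported_count(actioned: int, proactive_rate_pct: float) -> int:
    """Approximate number of cases that surfaced only through user reports."""
    return actioned - proactive_count(actioned, proactive_rate_pct)


for category, (actioned, rate) in reported_figures.items():
    found = proactive_count(actioned, rate)
    missed = user_reported_count(actioned, rate)
    print(f"{category}: ~{found:,} found proactively, ~{missed:,} via user reports")
```

Seen this way, a 14% rate for bullying and harassment means roughly 364,000 cases were caught proactively, while about 2.2 million came to light only after users reported them.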

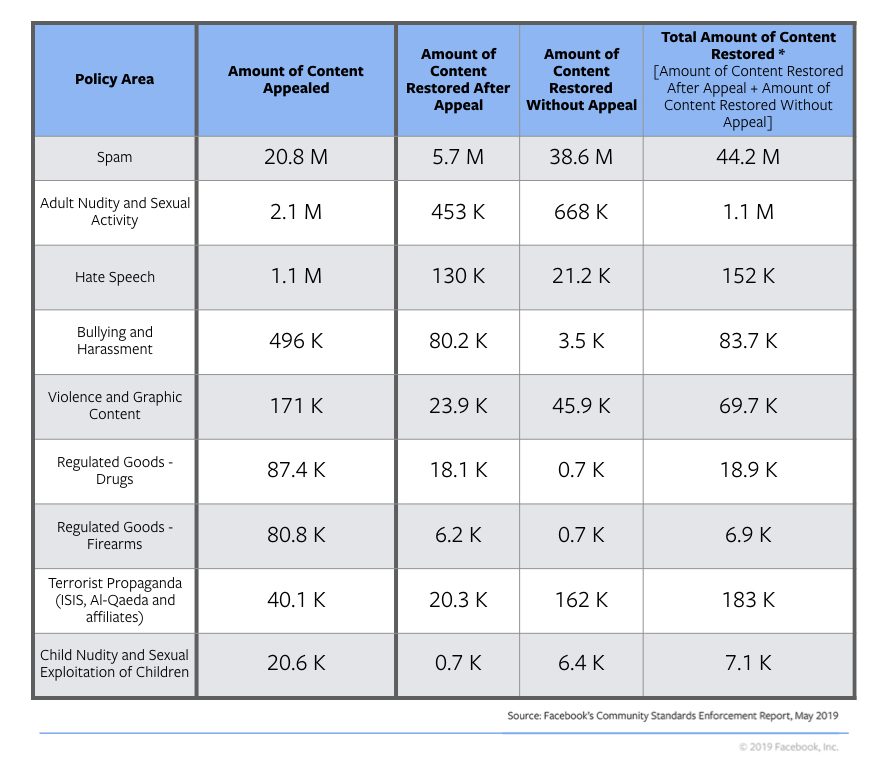

Also interesting is the data on how much content in each category was appealed and then restored after Facebook reviewed it.

You can read the complete report at the following link.