Despite all of their security measures, social networking sites constantly struggle with user activity that violates community policies, whether it’s fake user profiles, fake news or inappropriate content. To that end, Facebook issued its fourth transparency report, which shows how the platform handles violations.

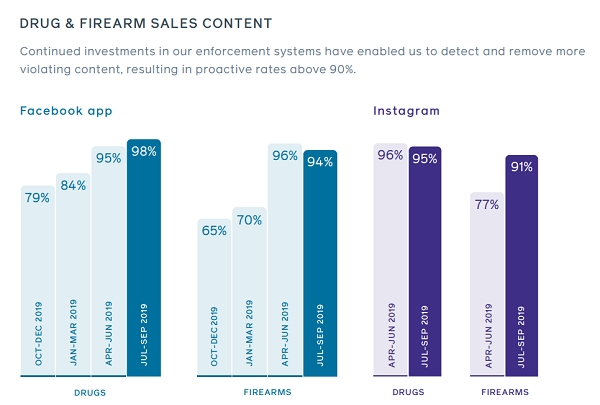

Facebook is constantly improving automatic detection systems and methods for recognizing inappropriate content. The official report on the subject includes some interesting figures and shows progress compared to previous periods:

- Facebook reports a higher detection rate for content related to drugs, firearms, nudity and child exploitation.

- In the first quarter of 2019, Facebook removed 5.8 million posts that violated children’s rights; in the third quarter of 2019, that figure rose to 11.6 million pieces of content.

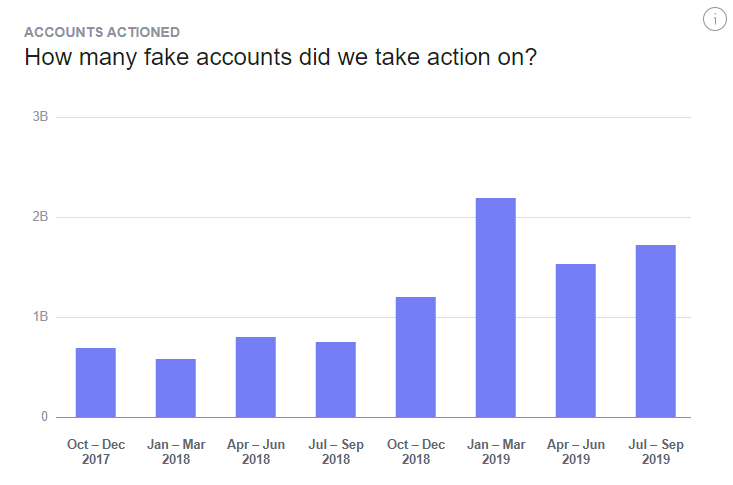

- Unfortunately, the number of fake accounts continues to increase. In 2019, Facebook removed 5.4 billion fake profiles. Detection has improved, but overall the number rose by 3.8 billion compared with the previous year. Facebook says about 5% of accounts across its community are fake.

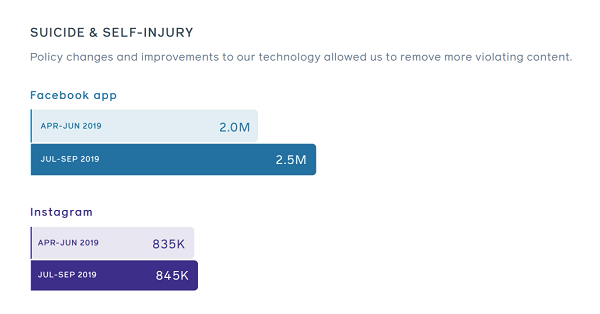

- Facebook also said that the amount of removed content related to suicide or self-harm is increasing.

- Facebook is also stepping up its efforts against terrorism-related content and is investing more money in this area.

If you want to learn more about the issue, read the complete report.