Facebook has published a 25-page document with criteria and examples of content that is and is not allowed on the platform. The goal is to clarify the rules and remove violence, harassment, terrorism, intellectual property theft, and hate speech from the social network.

These rules will be translated into more than 40 languages for the public. Facebook currently has more than 7,500 content reviewers, 40% more than a year ago. Moderators will also have access to advice and resources 24/7, since many situations are complicated. In addition to the team of reviewers, Facebook uses artificial intelligence to detect and remove content.

The complete set of rules is available in Facebook’s Community Standards and covers areas such as:

- Violence and criminal behavior

- Safety

- Objectionable content

- Integrity and authenticity

- Respecting intellectual property

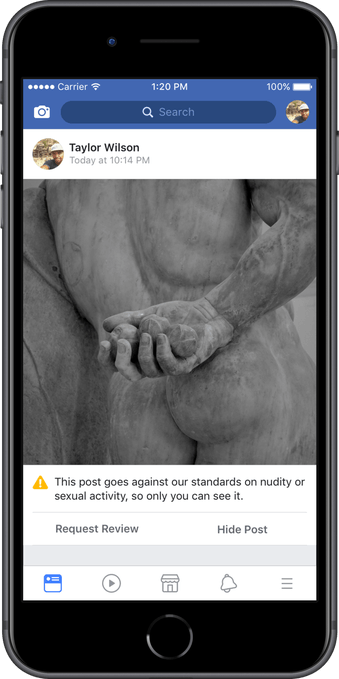

Facebook also plans to change the appeals process for removed content. Until now, users have only been able to request a review when a page, profile, or group was removed. Facebook now notifies users when their content has been removed and explains the reason for the removal. Users will be given a “Request Review” option to ask for the content to be checked again within 24 hours. Facebook will also expand its Community Standards Forums in Germany, France, Great Britain, India, Singapore, and the USA.

Facebook wants to be a safe place where people can discuss different topics. Therefore, it needs clear rules, which are called Community Standards.